Using Logs to Tell the Story of Your System in Production

Stop using logs just for debugging. Learn how production logs reveal system behavior, performance trends, and hidden dependencies through narrative analysis.

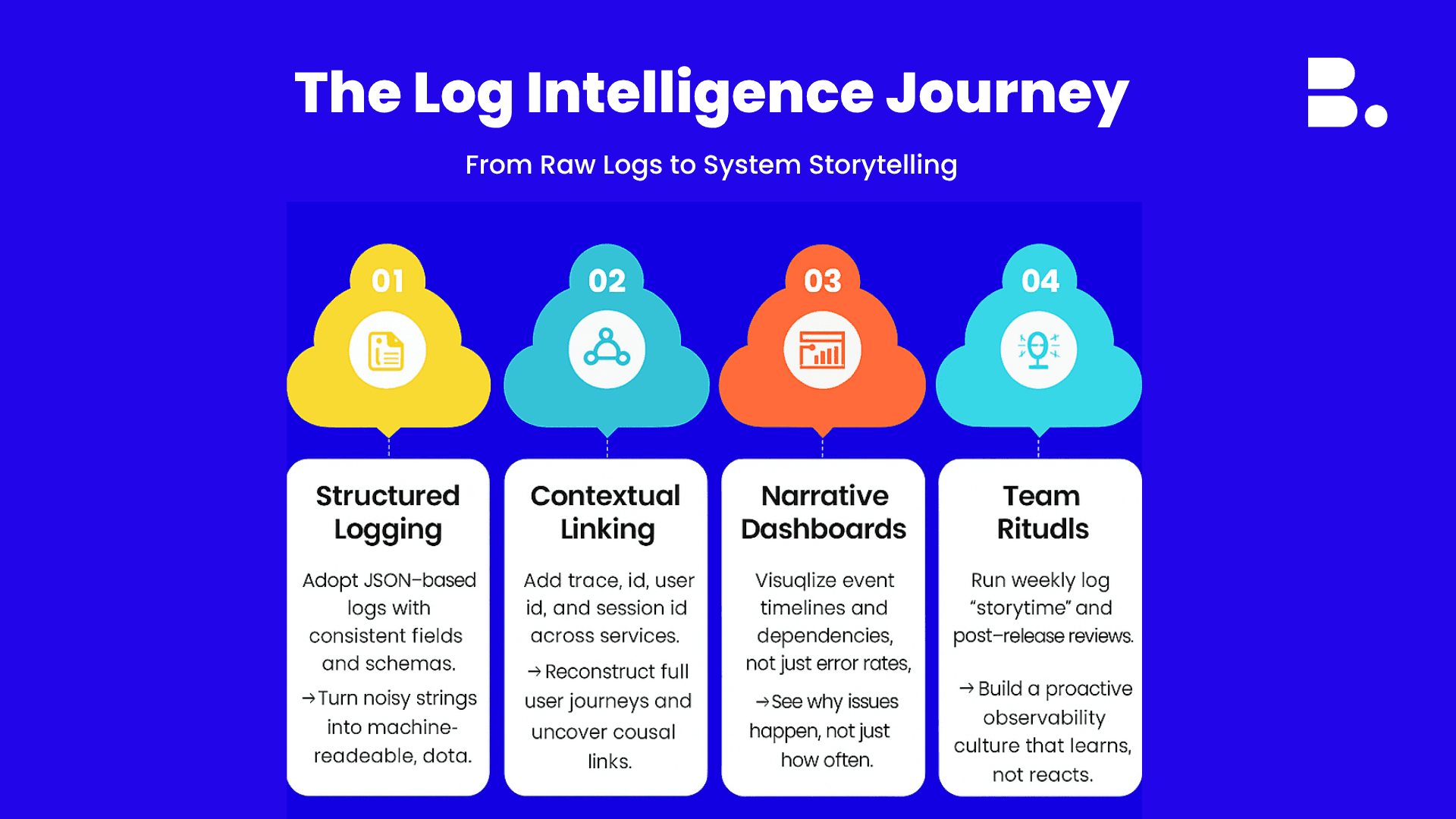

TL;DR: Your production logs already contain the complete story of how your system behaves under real-world conditions; most teams just don't know how to read them. Stop treating logs as post-mortem tools for debugging failures. Start using them as narrative instruments for observability, revealing performance trends, retry patterns, hidden service dependencies, and user journey bottlenecks. The shift requires four concrete actions: implement structured logging with consistent JSON fields, add contextual IDs (trace IDs, user session IDs) to link events across services, build narrative dashboards that visualize sequences instead of just error rates, and create team rituals like "storytime retrospectives" where engineers interpret patterns together. Great engineering teams don't just react to logs. They interpret them, asking, "What's the story behind this pattern?" This transforms logs from debugging breadcrumbs into competitive intelligence for building more resilient systems.

The Story Your Logs Are Already Writing

Your system crashed at 3 AM. You're grepping through 50,000 log lines searching for the error. Twenty minutes later, you find it: NullPointerException in PaymentService line 247.

You fix the bug. You deploy the patch. The alert stops firing.

But here's what you missed: In the two hours before that crash, your logs recorded 147 retry attempts from a specific third-party API, a 300% spike in database connection timeouts, and a cascading failure pattern across three supposedly independent microservices. Your logs were screaming the story of what was about to happen. You just weren't listening.

Most engineering teams only open their logging dashboard when something breaks. Logs become the digital equivalent of a black box recorder: useful only after the crash, examined only during the post-mortem.

But what if your logs could tell you why things worked, not just why they failed?

The truth is that every API request, user action, database query, retry, and timeout is being recorded in real time. Hidden inside that continuous narrative are insights that no metric dashboard can surface: why latency spikes during specific workflows, which user patterns trigger edge cases, where unexpected coupling exists between services, and how your architecture actually behaves under real load.

This isn't about adding more logging. It's about reading what's already there differently, transforming production logging insights into strategic intelligence for building more resilient systems.

What Does "Logs as System Storytelling" Actually Mean?

1. Every Production System Has a Living Narrative

Think of your production environment as a theater with thousands of scenes playing simultaneously. A user signs up. An API request flows through three microservices. A payment webhook triggers a background job. A cache expires, forcing a database query. A third-party service returns a 429 status code.

Each moment is a scene. Each log line captures a decision, a path taken, an outcome produced. When viewed in isolation, a single log entry is just a fact: "Payment processed successfully in 320ms." But when you connect the dots (signup → email verification → cart checkout → payment attempt → retry → success), you're watching a complete story unfold.

This is understanding systems through logs at its core: seeing the narrative arc, not just the discrete events.

2. Logs Record Intent, Decisions, and Context, Not Just Outcomes

Traditional monitoring focuses on what went wrong. Logs, when used narratively, reveal:

User intent: What was the user trying to accomplish?

System decisions: Which code paths executed? Which services were called? What fallback strategies triggered?

Environmental context: What was the load? What was the state of dependencies? Were rate limits approaching?

Outcomes and side effects: Did it succeed? How long did it take? What downstream impacts occurred?

This richness is why logs are irreplaceable for system observability: they capture the "why" behind system behavior, not just the "what."

3. The Fundamental Shift: From Debugging to Discovery

Most engineers are trained to use logs reactively: something breaks, you search for errors, you find the root cause. But proactive log analysis for system behavior reveals patterns before they become incidents:

Why does checkout latency spike every Tuesday at 2 PM?

Why do 30% of mobile users abandon signup at step three?

Why is this "non-critical" background service suddenly handling 10x traffic?

This shift, from firefighting to pattern recognition, is what separates reactive teams from resilient teams.

The Real Cost of Treating Logs as Just Error Trackers

When you only look at logs during incidents, you're losing critical intelligence every single day. Here's what reactive-only logging costs your team:

1. You Miss the Warning Signs Before Incidents

Production failures rarely happen instantly. They build up: retry rates creep higher, latency increases gradually, and connection pools saturate slowly. By the time your alert fires, you're in crisis mode. But the logs were showing the pattern for hours; you just weren't watching.

2. You Can't Identify Root Causes, Only Symptoms

Logs that say "Database connection timeout" tell you what happened. But without the narrative (what happened in the 15 minutes before the timeout), you can't answer why. You end up treating symptoms (restarting services, scaling up resources) instead of fixing root causes (a hidden N+1 query pattern, connection pool misconfiguration, or upstream service degradation).

3. You Miss Architectural Insights That Could Prevent Future Issues

Your architecture diagram shows Service A and Service B as independent. But your logs reveal they share a Redis cache, and when Service A's load spikes, Service B's performance degrades. This hidden coupling is invisible in metrics but obvious in log analysis for system behavior.

4. You Waste Engineering Time on Repetitive Debugging

Without narrative context, engineers debug the same class of issues repeatedly. Each incident feels new, but the logs would show it's the same pattern: retry storms during third-party API maintenance windows, memory leaks that correlate with specific user workflows, cascading failures triggered by the same edge case.

The cost isn't just downtime. It's lost velocity, repeated mistakes, and architectural blind spots that compound over time.

The real impact of narrative logging becomes clear when you see it in action. Here's how one engineering team turned its logs from noise into a competitive advantage.

Case Study: How a FinTech Team Found a Hidden Throttling Pattern in Production Logs

A fintech startup faced a persistent 5% payment failure rate with generic timeout errors. One engineer traced individual journeys using trace IDs and discovered that 70% of failures occurred between 9 and 11 AM, all showing HTTP 429 (rate limited) from the payment gateway before timing out. The gateway was applying an undocumented throttling policy during peak hours.

By implementing exponential backoff and circuit breakers, failures dropped from 5% to 0.2%. The lesson: This system behavior insight only emerged by treating logs as narratives, not error lists.
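The case study does not show the team's actual code, so the following is only a minimal sketch of the two techniques it names. The function and class names, the 0.5-second base delay, the 30-second cap, and the breaker thresholds are all illustrative assumptions.

```python
import random


def backoff_delay(attempt: int, base: float = 0.5, cap: float = 30.0) -> float:
    """Upper bound (seconds) for the wait before retry number `attempt` (1-based)."""
    return min(cap, base * (2 ** (attempt - 1)))


def jittered_delay(attempt: int, rng: random.Random) -> float:
    """Full jitter: wait a random time between 0 and the exponential bound."""
    return rng.uniform(0.0, backoff_delay(attempt))


class CircuitBreaker:
    """Opens after `threshold` consecutive failures, then blocks calls for `cooldown` seconds."""

    def __init__(self, threshold: int = 3, cooldown: float = 60.0):
        self.threshold = threshold
        self.cooldown = cooldown
        self.failures = 0
        self.opened_at = None  # time the breaker opened, or None while closed

    def allow(self, now: float) -> bool:
        if self.opened_at is None:
            return True
        # Once the cooldown has passed, let a trial call through (half-open).
        return now - self.opened_at >= self.cooldown

    def record(self, success: bool, now: float) -> None:
        if success:
            self.failures = 0
            self.opened_at = None
        else:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = now
```

A caller would check `allow()` before each gateway request, sleep for `jittered_delay(attempt, rng)` between retries, and pass every outcome to `record()`. Logging each of those decisions is what makes the retry story visible later.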

The 4-Step Framework to Transform Logs Into System Intelligence

You don't need expensive tools or massive refactoring to start reading your system's story. Here's the practical framework for implementing system observability through logging:

1. Implement Structured Logging with Consistent Fields

The Core Principle: Replace ad-hoc string logs with machine-readable JSON using standardized fields across all services.

Why This Matters:

Unstructured logs like "User payment failed" are forensic dead-ends. You can't filter by user, trace requests across services, or aggregate patterns. Structured logs with consistent fields transform logs from debug output into queryable data.

Add Context-Rich Optional Fields:

endpoint: The API route or function

http_status: Response codes (200, 429, 500)

error_code: Your internal error taxonomy

retry_attempt: Which retry iteration (1, 2, 3)

duration_ms: How long the operation took

metadata: Any domain-specific context (payment_amount, item_count, etc.)

Choose Libraries That Enforce Structure:

Node.js: Winston, Pino

Python: structlog, python-json-logger

Java: Logback with JSON encoder, Log4j2

Go: zap, zerolog
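As a minimal sketch of what a structured entry could look like, the following uses only Python's standard logging and json modules. The service name, route, and field values are illustrative assumptions chosen to resemble a payment retry; in practice a library such as structlog or python-json-logger would replace the hand-written formatter.

```python
import io
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object with a consistent set of core fields."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "level": record.levelname,
            "service": "payment_service",  # hypothetical service name
            "event": record.getMessage(),
        }
        # Context passed via extra={"fields": {...}} is attached to the record.
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry)


stream = io.StringIO()  # stands in for stdout or a log shipper
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("payments")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.warning(
    "payment_gateway_retry",
    extra={"fields": {
        "trace_id": "abc-123-xyz",
        "user_id": "user_789",
        "endpoint": "/checkout/pay",  # hypothetical route
        "http_status": 429,
        "retry_attempt": 2,
        "duration_ms": 1500,
    }},
)
print(stream.getvalue().strip())
```

The printed line is a single JSON object, so every field in it can be filtered, grouped, and joined on by a log aggregation tool.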

What This Single Log Tells You:

A payment retry occurred (not the first attempt, it's retry #2)

The gateway returned a rate limit (429)

It took 1.5 seconds

It's part of a larger request (trace_id links to other events)

It's for a specific user (enabling journey reconstruction)

The Payoff: With structured logs, you can now query: "Show me all payment_gateway_retry events where http_status=429 between 9-11 AM" and instantly see patterns that were invisible in string logs.
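To make that query concrete, here is a small sketch that applies the same filter to JSON log lines in plain Python. The sample lines, dates, and trace IDs are made up for illustration; a log platform would express the same filter in its own query language.

```python
import json
from datetime import datetime

# Hypothetical JSON log lines, one object per line.
raw_lines = [
    '{"timestamp": "2024-05-07T09:15:42", "event": "payment_gateway_retry", "http_status": 429, "trace_id": "abc-123-xyz"}',
    '{"timestamp": "2024-05-07T10:02:10", "event": "payment_gateway_retry", "http_status": 429, "trace_id": "def-456"}',
    '{"timestamp": "2024-05-07T14:30:00", "event": "payment_gateway_retry", "http_status": 429, "trace_id": "ghi-789"}',
    '{"timestamp": "2024-05-07T09:40:00", "event": "payment_processed", "http_status": 200, "trace_id": "jkl-012"}',
]


def matches(entry: dict) -> bool:
    """True for rate-limited gateway retries logged between 9 and 11 AM."""
    hour = datetime.fromisoformat(entry["timestamp"]).hour
    return (
        entry["event"] == "payment_gateway_retry"
        and entry["http_status"] == 429
        and 9 <= hour < 11
    )


hits = [entry for entry in map(json.loads, raw_lines) if matches(entry)]
for entry in hits:
    print(entry["timestamp"], entry["trace_id"])
```

The same filter against free-text logs would need fragile regular expressions, which is the practical argument for consistent field names.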

2. Add Contextual IDs to Link Events Across Services

The Core Principle: Inject unique identifiers that follow a single request through your entire distributed system, linking isolated events into coherent narratives.

Why This Matters:

Without contextual linking, you have thousands of isolated events. You can see that Service A had 100 errors and Service B had 50 timeouts, but you can't answer: "Are these related? Is Service A's failure causing Service B's timeouts?"

Contextual IDs let you reconstruct complete user journeys: "User 789 signed up → verified email → added three items to cart → initiated payment → encountered rate limit → retried successfully."

The Implementation Blueprint:

1. Trace IDs (Request Correlation Across Services):

Generate a unique trace_id (UUID) at your API gateway or entry point

Propagate it through ALL downstream services via HTTP headers (X-Trace-Id)

Include it in every log entry related to that request

Use the W3C Trace Context standard (which defines the traceparent header) or OpenTelemetry for automatic propagation

2. User/Session IDs (Journey Tracking Over Time):

Include user_id in every log for authenticated actions

Include session_id for anonymous user tracking

This lets you trace user behavior across multiple requests: login → browse → checkout

3. Request IDs (Single Service Correlation):

Generate a request_id for each incoming API call

Useful for debugging within a single service before you have full distributed tracing
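The blueprint above can be sketched by hand with Python's standard library. The helper names (`begin_request`, `outgoing_headers`, `TraceIdFilter`) are hypothetical, and the plain dict stands in for a web framework's header object, which is normally case-insensitive.

```python
import contextvars
import logging
import uuid

# Holds the trace ID of whichever request is currently being handled.
current_trace_id = contextvars.ContextVar("trace_id", default="-")

TRACE_HEADER = "X-Trace-Id"


def begin_request(headers: dict) -> str:
    """Reuse the caller's trace ID if one was sent; otherwise start a new trace."""
    trace_id = headers.get(TRACE_HEADER) or str(uuid.uuid4())
    current_trace_id.set(trace_id)
    return trace_id


def outgoing_headers() -> dict:
    """Headers to attach to every downstream call made during this request."""
    return {TRACE_HEADER: current_trace_id.get()}


class TraceIdFilter(logging.Filter):
    """Stamp every log record with the active trace ID."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.trace_id = current_trace_id.get()
        return True
```

Attaching `TraceIdFilter()` to a handler makes `%(trace_id)s` available in its format string, so every line written while handling a request carries the same ID without each call site passing it explicitly.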

The Payoff in Action:

Remember the fintech case study? Here's how contextual IDs revealed the throttling pattern:

Query: trace_id="abc-123-xyz"

Result: Complete payment journey reconstruction:

09:15:40 - user_789 initiated checkout (trace_id: abc-123-xyz)

09:15:41 - payment_service called gateway (trace_id: abc-123-xyz)

09:15:42 - gateway returned 429 rate limited (trace_id: abc-123-xyz)

09:15:42 - retry scheduled in 15 min (trace_id: abc-123-xyz)

09:30:43 - retry attempt #2 (trace_id: abc-123-xyz)

09:30:44 - payment succeeded (trace_id: abc-123-xyz)

Without trace_id, these look like random events. With it, you see the exact sequence: call → rate limit → scheduled retry → eventual success. This is understanding systems through logs narratively.
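A rough sketch of that reconstruction: group events by trace_id, then sort each group by timestamp. The event dictionaries below are simplified stand-ins for real log entries, and the second trace is invented to show that unrelated events are separated out.

```python
from collections import defaultdict

# Events arrive interleaved and out of order, as they would from several services.
events = [
    {"ts": "09:30:44", "trace_id": "abc-123-xyz", "event": "payment succeeded"},
    {"ts": "09:15:42", "trace_id": "abc-123-xyz", "event": "gateway returned 429"},
    {"ts": "09:20:05", "trace_id": "zzz-999", "event": "unrelated request"},
    {"ts": "09:15:40", "trace_id": "abc-123-xyz", "event": "checkout initiated"},
    {"ts": "09:30:43", "trace_id": "abc-123-xyz", "event": "retry attempt #2"},
    {"ts": "09:15:41", "trace_id": "abc-123-xyz", "event": "gateway called"},
]


def journeys(all_events):
    """Map each trace_id to its events in chronological order."""
    by_trace = defaultdict(list)
    for entry in all_events:
        by_trace[entry["trace_id"]].append(entry)
    return {
        trace_id: sorted(group, key=lambda e: e["ts"])
        for trace_id, group in by_trace.items()
    }


for step in journeys(events)["abc-123-xyz"]:
    print(step["ts"], step["event"])
```

Sorting on the string works here only because the timestamps are zero-padded and from a single day; real entries should carry full ISO 8601 timestamps.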

Tool Recommendation: Implement OpenTelemetry for automatic context propagation. It handles trace ID generation and header injection, and it integrates with logging libraries.

3. Build Narrative Dashboards That Visualize Sequences, Not Just Metrics

The Core Principle: Create dashboards that show event timelines and causal relationships, not just aggregate counts or percentiles.

Why This Matters:

A dashboard showing "Error Rate: 2.5%" tells you nothing about causation. You know errors exist, but not why, not which user journeys are breaking, and not what sequence of events led to the failure.

A narrative dashboard shows: "145 payment attempts hit third-party rate limit between 9-11 AM → triggered average 2.3 retries each → 87% eventual success → 13% abandoned checkout". Now you understand the full story and can take action.

The Implementation Blueprint:

Dashboard Type #1: User Journey Failure Analysis

What to visualize:

Top 10 user journeys that end in errors (e.g., "signup → email verification → payment → error")

Conversion funnel with dropout points

Time-to-failure distribution (do errors happen immediately or after retries?)
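One possible way to compute the first of these panels is to turn each journey into a signature string and count the ones that end in an error. The session IDs and step names below are hypothetical sample data.

```python
from collections import Counter

# Ordered step names per session (hypothetical data reconstructed from logs).
journeys = {
    "s1": ["signup", "email_verification", "payment", "error"],
    "s2": ["signup", "email_verification", "payment", "success"],
    "s3": ["signup", "email_verification", "payment", "error"],
    "s4": ["signup", "error"],
}

# Count each distinct path that ends in an error.
failing = Counter(
    " -> ".join(steps) for steps in journeys.values() if steps[-1] == "error"
)
top_failing = failing.most_common(10)

for path, count in top_failing:
    print(count, path)
```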

Dashboard Type #2: Service Dependency Chain Visualization

What to visualize:

Service call sequences with >3 retries (reveals flaky dependencies)

Cascade failures: "Service A slow → Service B timeout → Service C circuit breaker open"

Cross-service latency breakdown by trace ID

Tools: Jaeger or Zipkin for distributed trace visualization combined with log drill-down

Dashboard Type #3: Incident Timeline ("The 15 Minutes Before")

What to visualize:

Event timeline leading to SLA breach

What services showed warning signs first?

Which thresholds were crossed in what order?
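The incident timeline can be approximated with a time-window filter over log events. This sketch assumes each event carries a datetime, a service name, and a level; the services, times, and helper names are invented for illustration.

```python
from datetime import datetime, timedelta


def events_before(events, breach_time, minutes=15):
    """Events in the window leading up to the breach, oldest first."""
    start = breach_time - timedelta(minutes=minutes)
    window = [e for e in events if start <= e["ts"] < breach_time]
    return sorted(window, key=lambda e: e["ts"])


def first_warning_per_service(window):
    """First WARN or ERROR per service, in the order the services degraded."""
    seen = {}
    for entry in window:
        if entry["level"] in ("WARN", "ERROR") and entry["service"] not in seen:
            seen[entry["service"]] = entry["ts"]
    return list(seen.items())


breach = datetime(2024, 5, 7, 3, 0)  # hypothetical SLA breach time
events = [
    {"ts": breach - timedelta(minutes=4), "service": "service_b", "level": "ERROR"},
    {"ts": breach - timedelta(minutes=12), "service": "service_a", "level": "WARN"},
    {"ts": breach - timedelta(minutes=40), "service": "service_c", "level": "WARN"},
    {"ts": breach - timedelta(minutes=9), "service": "service_a", "level": "INFO"},
    {"ts": breach - timedelta(minutes=2), "service": "service_c", "level": "ERROR"},
]

window = events_before(events, breach)
order = first_warning_per_service(window)
```

Here `order` lists service_a first, then service_b, then service_c, which answers "which service showed warning signs first?" for this sample.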

Real Example (Better Than Generic Metrics):

Traditional Metric Dashboard:

Payment Service Errors: 145

Error Rate: 2.5%

P95 Latency: 320ms

What you know: Errors exist. What you DON'T know: Why? Which user flows? What's the pattern?

Narrative Dashboard:

PATTERN DETECTED: Payment Gateway Throttling

- 145 payment attempts hit rate limit (HTTP 429)

- Time window: 9:00-11:00 AM EST

- Affected gateway: Stripe

- Retry pattern: Average 2.3 attempts per transaction

- Eventual success rate: 87%

- User impact: 13% checkout abandonment

- Recommended action: Implement circuit breaker + negotiate higher rate limit

What you know: The complete story, root cause, and next action.
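The figures in a narrative panel like this are ordinary aggregates over per-transaction records. A minimal sketch, using a four-record hypothetical sample rather than the numbers quoted above:

```python
from statistics import mean

# One record per transaction that hit HTTP 429 (hypothetical sample).
throttled = [
    {"trace_id": "t1", "attempts": 2, "outcome": "success"},
    {"trace_id": "t2", "attempts": 3, "outcome": "success"},
    {"trace_id": "t3", "attempts": 2, "outcome": "abandoned"},
    {"trace_id": "t4", "attempts": 1, "outcome": "success"},
]


def summarize(records):
    """Roll per-transaction records up into the headline numbers of the panel."""
    total = len(records)
    succeeded = sum(r["outcome"] == "success" for r in records)
    return {
        "rate_limited_transactions": total,
        "avg_attempts": round(mean(r["attempts"] for r in records), 1),
        "eventual_success_pct": round(100 * succeeded / total),
        "abandoned_pct": round(100 * (total - succeeded) / total),
    }


summary = summarize(throttled)
print(summary)
```

The per-transaction records themselves come from grouping log events by trace_id, which is why the contextual IDs from Step 2 are a prerequisite for this kind of dashboard.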

Implementation Tip: Start with one critical flow (e.g., checkout). Build a dashboard showing its complete timeline: frontend click → API gateway → auth check → inventory check → payment → confirmation. Add filters by trace_id, user_id, and error_code. Iterate from there.

4. Create Team Rituals for Log Interpretation (Not Just Incident Response)

The Core Principle: Schedule regular sessions where engineers proactively explore logs to discover patterns, rather than just reacting to alerts.

Why This Matters:

If you only look at logs during 3 AM incidents, you're in permanent firefighting mode. Proactive log exploration builds system intuition, uncovers optimization opportunities, and catches issues before they become outages.

The Implementation Blueprint:

Ritual #1: Weekly "Storytime" Sessions (30 minutes)

Format:

Pick one critical user flow (checkout, signup, data import)

As a team, pull up production logs filtered by recent trace_id examples

Walk through the journey step by step

Questions to ask:

What surprised us? (Unexpected service calls, longer-than-expected latencies)

Where are hidden dependencies? (Service A calls Service B indirectly via cache)

What failure modes exist? (Retry loops, timeout cascades, race conditions)

What would break if X failed? (Dependencies we didn't document)

Output: Document findings in your team wiki. Add monitoring for newly discovered edge cases.

Ritual #2: Post-Release Log Reviews (20 minutes after every deploy)

Format:

Immediately after deployment, gather the team

Filter logs to show only traffic hitting the new code paths

Look for anomalies: new error messages, latency changes, unexpected retry rates

Questions to ask:

Are new code paths executing as expected?

Did latency patterns change? (Compare to pre-deploy baseline)

Are there unexpected retry attempts or fallback triggers?

What user behaviors are we not handling gracefully?

Output: Catch regressions early. Create tickets for unexpected behavior before it scales.
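The baseline comparison in this ritual can be as simple as comparing p95 latency from duration_ms values logged before and after the deploy. The function names and the 1.2x tolerance are assumptions for this sketch, not a recommended threshold.

```python
from statistics import quantiles


def p95(durations_ms):
    """95th percentile of the samples (needs at least two data points)."""
    return quantiles(durations_ms, n=20, method="inclusive")[-1]


def regressed(baseline_ms, post_deploy_ms, tolerance=1.2):
    """Flag the deploy if p95 latency grew by more than the tolerance factor."""
    return p95(post_deploy_ms) > tolerance * p95(baseline_ms)


baseline = list(range(100, 200))       # hypothetical pre-deploy durations (ms)
slower = [d * 2 for d in baseline]     # hypothetical post-deploy durations (ms)
print(regressed(baseline, slower))
```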

Ritual #3: Monthly "Pattern Discovery" Retrospectives (60 minutes)

Format:

Review the last 30 days of log analysis for system behavior trends

Use dashboards from Step #3 to identify slow-moving patterns

Questions to ask:

Which endpoints are getting slower over time?

Which error messages are increasing month-over-month?

What user behaviors are we seeing more of? (Mobile traffic up 40%?)

Where are we approaching capacity limits? (Connection pool saturation trends)

Output: Proactive capacity planning. Architecture decisions based on real usage patterns.

The Cultural Shift:

Train engineers to ask: "What is my system trying to tell me?" instead of "Where's the error?"

This single question transforms log analysis for system behavior from a reactive debugging chore into strategic intelligence gathering. Engineers start seeing logs as:

Early warning systems (not just post-mortems)

Product usage insights (not just error trackers)

Architecture documentation (how the system actually works vs. how we think it works)

Success Metric: When engineers voluntarily explore logs outside of incidents, you've built the right culture.

How to Build a Team Culture Around Log-Driven Curiosity

Implementing the framework is the easy part. The harder challenge is shifting the team's mindset from reactive debugging to proactive curiosity. Here's how to build a culture where engineers naturally think narratively:

1. Make Logs Accessible to Everyone, Not Just DevOps

Your logging tools shouldn't require specialized training to use. Product managers, designers, and frontend engineers all benefit from understanding how users actually interact with your system in production. Invest in tools with intuitive UIs and create documentation for common queries: "How to trace a user journey," "How to find slow API calls," "How to identify retry patterns."

2. Celebrate Discoveries, Not Just Fixes

When an engineer uncovers a performance insight or hidden dependency through log analysis, recognize it publicly. Share the findings in team channels. Write it up in your internal wiki. This reinforces the behavior: logs aren't just for firefighting; they're for learning, improving, and building better systems.

3. Treat Logs as Documentation of Real-World Behavior

Your system's actual behavior in production is often different from what you designed on whiteboards. Logs are the ground truth, the authoritative record of how your architecture performs with real users, real load, and real edge cases. Respect them as documentation, not just debugging output.

Conclusion

Your production logs are already writing a detailed autobiography of your system's behavior. The question isn't whether the story exists; it's whether you're reading it.

By shifting from reactive debugging to narrative analysis, you unlock a deeper level of system observability through logging. You stop asking "What broke?" and start asking "How does my system actually behave? What patterns exist? What is it trying to tell me?" This transforms logs from noise into competitive intelligence.

The teams that master this narrative approach don't just build faster; they build smarter. They catch problems before they become incidents. They understand their architecture more deeply than their documentation suggests. And they make better decisions because they're listening to what their systems are actually saying.

At Better Software, we build systems with observability baked in from day one, not as an afterthought, but as a core engineering principle. We've spent over seven years helping startups create foundations that tell clear stories, making debugging faster and architecture decisions smarter.

Ready to build a system that speaks clearly? Book your free 30-minute Build Strategy Call and discover how we transform observability into a competitive advantage.

Summary: Production logs are more than debugging tools; they're your system's living narrative. By treating logs as storytelling instruments, engineering teams discover performance trends, hidden dependencies, user journey bottlenecks, and reliability patterns invisible to traditional metrics. The shift from reactive debugging to proactive system observability through logging requires four actions: implement structured logging with consistent JSON fields, add contextual IDs (trace and session IDs) to link events across services, build narrative dashboards that visualize event sequences, and create team rituals like "storytime retrospectives" for collaborative pattern discovery.

FAQ

1. What is system observability through logging?

System observability through logging is the practice of using structured log data to gain deep visibility into how production systems behave, perform, and interact with users. Unlike reactive debugging, it focuses on narrative analysis, connecting events across time and services to reveal patterns, dependencies, and trends that metrics alone cannot show.

2. How are logs different from metrics and distributed traces?

Metrics provide aggregate data (error rates, latency percentiles) that show what is happening. Traces visualize request flows across services, showing where time is spent. Logs capture detailed event-level context, the "why" behind system behavior. Effective observability uses all three together: metrics for alerts, traces for flow visualization, and logs for root cause narrative.

3. What makes structured logging better than traditional string-based logs?

Structured logging uses a consistent, machine-readable format (typically JSON) with standardized fields like timestamp, service name, trace ID, and user ID. This enables programmatic filtering, correlation, and analysis at scale. Traditional string logs like "User payment failed" lack the context and consistency needed for narrative analysis or automated pattern detection.

4. What are contextual IDs, and why do they matter for log storytelling?

Contextual IDs (trace IDs, user session IDs, request IDs) are unique identifiers that link related events across different services and timestamps. They let you reconstruct complete journeys: "Show me every event for trace_id=xyz" reveals the entire request flow from user action to system outcome, transforming isolated log lines into coherent narratives.

5. Can I implement log storytelling without expensive APM tools?

Yes. You can start with free or low-cost tools like the ELK Stack (Elasticsearch, Logstash, Kibana), CloudWatch Logs Insights, or open-source options like Grafana Loki. The key requirements are: log aggregation with search capabilities, structured log parsing, and the ability to filter by contextual IDs. Advanced features like distributed tracing help, but aren't mandatory.

6. How do you discover hidden dependencies between microservices using logs?

Filter logs by time windows during incidents or load spikes, then look for correlation patterns. Example: "Every time Service A's request rate spikes above 1000/min, Service B's database connection pool saturates within 5 minutes." This reveals hidden coupling; perhaps they share a cache, queue, or database that creates unexpected interdependencies.
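A rough way to test such a hypothesis is to measure how often one event type follows another within a time window. The function name and sample timestamps below are hypothetical. A high ratio suggests coupling worth investigating; it does not prove causation.

```python
from datetime import datetime, timedelta


def followed_within(spikes, saturations, window=timedelta(minutes=5)):
    """Fraction of Service A spikes followed by a Service B saturation within the window."""
    if not spikes:
        return 0.0
    hits = sum(
        any(spike <= sat <= spike + window for sat in saturations)
        for spike in spikes
    )
    return hits / len(spikes)


day = datetime(2024, 5, 7)  # hypothetical day
spikes = [day.replace(hour=h) for h in (10, 11, 12)]
saturations = [
    day.replace(hour=10, minute=3),
    day.replace(hour=11, minute=4),
    day.replace(hour=12, minute=30),
]
ratio = followed_within(spikes, saturations)
```

In this sample two of the three spikes are followed by a saturation within five minutes.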

7. What log levels should production systems use?

Use INFO for significant business events (user signup, payment processed), WARN for recoverable errors or degraded states (retry triggered, fallback used), ERROR for failures requiring investigation, and DEBUG only in specific subsystems when actively troubleshooting. Avoid DEBUG in production globally; it creates noise and storage costs without narrative value.
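With Python's standard logging module, this policy might be configured as follows. The logger names are hypothetical; the point is that DEBUG is enabled for a single subsystem while everything else stays at INFO.

```python
import logging

# Global default: INFO and above (business events, warnings, errors).
logging.getLogger().setLevel(logging.INFO)

# Temporarily enable DEBUG for one subsystem under investigation.
# "payments.gateway" is a hypothetical logger name.
logging.getLogger("payments.gateway").setLevel(logging.DEBUG)

signup_log = logging.getLogger("signup")
gateway_log = logging.getLogger("payments.gateway.client")  # inherits DEBUG from its parent

signup_log.debug("dropped: below the global INFO threshold")
gateway_log.debug("emitted: the subsystem override applies to child loggers")
```

Because logger names form a hierarchy, the override covers every logger under that prefix and can be removed again with one line once the investigation ends.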

8. How much does it cost to store production logs at scale?

Costs vary by volume and retention. CloudWatch Logs charges ~$0.50/GB ingested and $0.03/GB/month stored. Elasticsearch on AWS costs $0.10-0.30/hour per node. Datadog charges per GB ingested with tiered pricing. Optimize costs by: using appropriate log levels, sampling high-volume debug logs, compressing old logs, and archiving to cheaper storage (S3) after 30-90 days.
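For a back-of-the-envelope estimate, the approximate CloudWatch figures above can be plugged into a small function. This sketch ignores compression, free tiers, and query charges, and the 10 GB/day volume is an arbitrary example.

```python
INGEST_USD_PER_GB = 0.50         # approximate CloudWatch Logs figure quoted above
STORAGE_USD_PER_GB_MONTH = 0.03  # approximate CloudWatch Logs figure quoted above


def monthly_cost_usd(gb_per_day: float, retention_days: int) -> float:
    """Steady-state monthly cost: a month of ingestion plus the retained volume."""
    ingested_gb = gb_per_day * 30
    retained_gb = gb_per_day * retention_days
    cost = ingested_gb * INGEST_USD_PER_GB + retained_gb * STORAGE_USD_PER_GB_MONTH
    return round(cost, 2)


print(monthly_cost_usd(10, 30))
print(monthly_cost_usd(10, 90))
```

At these rates ingestion dominates: 10 GB/day costs about $150 per month to ingest, while tripling retention from 30 to 90 days adds only about $18 in storage. That is why log levels and sampling usually matter more than retention for cost.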

9. How long should you retain production logs?

Retain recent logs (7-30 days) in hot storage for fast queries and active investigations. Archive 30-90 day logs to warm storage for compliance and trend analysis. Keep 90+ day logs in cold storage (S3, Glacier) for regulatory requirements. Balance retention with cost: most production insights come from recent logs, while compliance drives long-term retention.

10. What's the difference between logging and Application Performance Monitoring (APM)?

Logging captures discrete events with context (what happened, when, why). APM tools (New Relic, Datadog APM, AppDynamics) automatically instrument code to track request traces, transaction times, and resource usage. APM excels at visualizing request flows and identifying bottlenecks; logging excels at detailed forensics and custom event tracking. Use both: APM for real-time performance visibility, logs for deep investigations.